APSW is my Python SQLite wrapper that glues together the Python C API and the SQLite C API, so you can use SQLite from Python.

It has now been 20 years since I first released APSW! That means time for a retrospective.

Genesis

In 2003 I got a new cell phone, back when they had very small screens and physical keypads with only the numbers. Trying to maintain contacts and other information was painful, so I ended up creating BitPim which did all the work over a USB cable. It was implemented in Python using wxPython for the GUI.

Behind the scenes it needed to store information about contacts, calendars etc so I used the most expedient format possible - a Python dictionary (keys and values). It wasn't very large (many phones had limits of 100 contacts and calendar events).

It was trivial to read and write the dictionary to disk as is, and substantially similar to JSON. That also made it easy to edit, backup, compare etc outside of BitPim. But two things annoyed me...

1. You did manual save and load from the menus. That was completely normal for programs of the time, where the user was expected to manage the movement of data between permanent storage (ie still present after power events and reboots) and transient storage (ie in the memory of the process only). This sort of thing should be automatic, and thankfully is the majority of the time these days.

2. I wanted undo and redo, but not just the simple approach commonly taken. I wanted to see what the data looked like at any point in time, a simple example being to see what a contact's phone number was 7 months ago. No information should ever be lost or deleted, and you should easily be able to recover older values.

It was getting later in 2004 and I had to solve this. The solution was obviously structured data, which meant a database.

Storage Options

The various networked databases were not an option. They were large and featureful, required complex configuration including administrators, users, and permissions, and dwarfed the size of BitPim. Installation instructions would be a nightmare, especially since BitPim is cross platform and easy to install and run.

There was a family of local key value stores generally called dbm, which in theory could work, but would involve creating my own layer on top to structure and version data, execute queries, and similar work. In addition dbm often wasn't available on Windows.

SQLite

The first release of SQLite in late 2000 had used dbm as the storage backend and added a layer of SQL on top. SQLite was intended as a developer alternative when the "real" database wasn't available. SQLite 2 released a year later dropped dbm for a custom btree storage engine. SQLite 1 and 2 only stored strings behind the scenes. (The hard part of storage is getting transactions right.)

In mid 2004 SQLite 3 was released and was a real local database, having learned the lessons from SQLite 2. It included:

- More effective file format

- Unicode strings

- Typed storage in the backend (ie not just strings), including binary objects

- Bound parameters

- Better transaction locking model

- Collations

- 64 bit rowids

- More advanced query optimisation

- Improvements in SQL compliance, error reporting etc

This was the obvious perfect fit for BitPim storage.
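Two of those features, typed storage and bound parameters, are easy to see from Python. The following is a minimal sketch using the standard library sqlite3 module, chosen only so the example is self-contained. The helper name `storage_class` is made up for illustration.

```python
import sqlite3


def storage_class(value):
    """Return the SQLite storage class used for a bound Python value."""
    con = sqlite3.connect(":memory:")
    try:
        # The ? is a bound parameter: the value travels separately from
        # the SQL text instead of being formatted into it.
        return con.execute("SELECT typeof(?)", (value,)).fetchone()[0]
    finally:
        con.close()


for value in (42, 1.5, "text", b"\x00\x01", None):
    print(repr(value), "->", storage_class(value))
```

Each Python type arrives in SQLite as a distinct storage class rather than being flattened to a string, which is the behaviour SQLite 1 and 2 lacked.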

pysqlite

The existing Python binding to SQLite was named pysqlite. It had supported the earlier SQLite versions and upgraded to SQLite 3. (It was eventually adopted as the standard library sqlite3 module.) I started using it in BitPim, but quickly encountered several issues.

DBAPI

DBAPI / PEP249 defines a standard abstraction for accessing databases from Python, which does map well onto the common networked databases. It does not map well onto SQLite, so pysqlite had to resort to parsing SQL text to manage transactions. The exceptions didn't map well to SQLite's errors. I felt like I was fighting it just trying to do normal SQLite things, and had no interest in other databases.
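The transaction handling is the easiest part of this to observe. A minimal sketch, assuming the standard library sqlite3 module with its default (legacy) transaction settings, and a made-up `contacts` table:

```python
import sqlite3

con = sqlite3.connect(":memory:")  # default transaction handling
con.execute("CREATE TABLE contacts(name, phone)")
before = con.in_transaction

# No BEGIN is written here, yet the module decides on our behalf that
# this statement needs a transaction and opens one.
con.execute("INSERT INTO contacts VALUES (?, ?)", ("Alice", "555-0100"))
after = con.in_transaction

con.commit()
committed = con.in_transaction

print(before, after, committed)
```

The transaction appears without any BEGIN in the SQL, which is the kind of behind the scenes management that DBAPI requires and SQLite itself does not.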

Unicode

Unicode strings had been a new feature in Python 2 and SQLite 3, and pysqlite mostly got them right. There were a few places where it did not, and fixing those could cause backwards compatibility problems.

Threading

SQLite at that time could not be used across threads. Because BitPim had a GUI it meant the main thread was used for the GUI, and work was done in background threads. Even if you are diligent, destructors can run in any thread. It was important to me that cross thread usage was caught and became an error, and not silently ignored.
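The idea of turning cross thread usage into an error can be sketched in pure Python. This is only an illustration of the principle, not how APSW implements it in C. `ThreadBound`, `WrongThreadError`, and `try_in_other_thread` are hypothetical names.

```python
import threading


class WrongThreadError(Exception):
    """Hypothetical error for use outside the owning thread."""


class ThreadBound:
    """Hypothetical wrapper that remembers which thread created it."""

    def __init__(self, resource):
        self._resource = resource
        self._owner = threading.get_ident()

    def use(self):
        # Fail loudly rather than silently touching the resource
        if threading.get_ident() != self._owner:
            raise WrongThreadError("used outside the thread that created it")
        return self._resource


def try_in_other_thread(bound):
    """Call bound.use() in a new thread and return any error it raised."""
    result = []

    def worker():
        try:
            bound.use()
            result.append(None)
        except WrongThreadError as exc:
            result.append(exc)

    thread = threading.Thread(target=worker)
    thread.start()
    thread.join()
    return result[0]


bound = ThreadBound(object())
bound.use()  # same thread, so this is allowed
print(type(try_in_other_thread(bound)).__name__)
```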

Ergonomics

The cursor was not an iterator so you couldn't use

for row in cursor.execute('...')

which was Pythonic. I added an iterator wrapper but it made the cursor a lot slower.

An annoyance (that still persists) is you could only execute one statement at a time. Multiple semi-colon separated statements in a query string give an error.
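Both points can be checked against the standard library sqlite3 module as it is today. In this minimal sketch the table `t` is made up, and the exact exception class for the second case varies between Python versions, so both base classes are caught.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE t(x)")
con.executemany("INSERT INTO t VALUES (?)", [(1,), (2,), (3,)])

# Iterating directly over the result of execute() now works
rows = [row for row in con.execute("SELECT x FROM t ORDER BY x")]

# Two semicolon separated statements in one execute() call are rejected
try:
    con.execute("INSERT INTO t VALUES (4); INSERT INTO t VALUES (5)")
    multi_error = None
except (sqlite3.Error, sqlite3.Warning) as exc:
    multi_error = exc

print(rows)
print(type(multi_error).__name__)
```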

Tracing and debugging

There was no support for tracing queries or returned rows. This is important during development.

Because SQLite does all its work in C, it is highly desirable to see what Python values it is working with especially when errors occur. You got no clue.

Error handling

Both SQLite and Python have error indicators. SQLite uses an integer code and error string, while Python uses a thread local pending exception. If a callback raised an exception then all you got was an exception with the message "user-defined function raised exception", which was not useful. (Which function? What exception?) Even today all you get is this.
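A minimal sketch of the problem, using the standard library sqlite3 module and a made-up callback named `explode`:

```python
import sqlite3


def explode(value):
    # The real cause: a specific exception type with a specific message
    raise ValueError(f"cannot handle {value!r}")


con = sqlite3.connect(":memory:")
con.create_function("explode", 1, explode)

try:
    con.execute("SELECT explode(7)").fetchall()
    caught = None
except sqlite3.Error as exc:
    caught = exc

print(type(caught).__name__, "-", caught)
```

The exception that reaches the caller carries neither the ValueError nor the name of the function that raised it.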

I'll write my own

It was apparent that many of these issues would not be addressed ever (DBAPI compliance was important), or could not be done in the timeline I needed for BitPim. Since BitPim would only ever use SQLite, there was absolutely no need for abstractions, and I wanted to have every feature of SQLite available to me.

Naming things

At the time it looked like there would be many SQLite wrappers, especially as some supported SQLite 2. I wanted an alternative that did things the SQLite way, and imaginatively came up with Another Python SQLite Wrapper, expecting it to be one of many. It ended up being one of two.

Coding

I recall it taking about a month to code up the first release. That involved mapping between the SQLite C API and the Python C API. An early decision I made was that the module would only be C code. Even today sqlite3 is split between Python code and C code.

Back then it would have meant potential deployment problems having to keep the Python files and compiled C extension in sync. By having only one output file that was not a problem that could occur.

It was then a matter of supporting SQLite versions and Python versions. It was only in January 2022 that I removed support for Python 2.3 which was the version current in 2004 when the project started!

Today APSW does consist of both Python code and compiled C code, and being out of sync is not an issue.

BitPim

It was a simple matter to switch BitPim from dictionaries on disk to dictionaries from SQLite. I was delighted to add undo and data version history so that data would never be lost from that point on.
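One way to get that kind of history is an append-only table where every change adds a row and nothing is updated in place. The sketch below is not BitPim's actual schema. The `phone_history` table, its columns, and the helper functions are invented for illustration, and it uses the standard library sqlite3 module to stay self-contained.

```python
import sqlite3

con = sqlite3.connect(":memory:")
# Hypothetical schema: changes are appended, never updated or deleted
con.execute(
    "CREATE TABLE phone_history("
    " contact TEXT, phone TEXT, changed_at INTEGER)"
)


def set_phone(contact, phone, when):
    """Record a new value; older values stay in the table."""
    con.execute(
        "INSERT INTO phone_history VALUES (?, ?, ?)", (contact, phone, when)
    )


def phone_as_of(contact, when):
    """Return the phone number that was current at time `when`, or None."""
    row = con.execute(
        "SELECT phone FROM phone_history"
        " WHERE contact = ? AND changed_at <= ?"
        " ORDER BY changed_at DESC LIMIT 1",
        (contact, when),
    ).fetchone()
    return row[0] if row else None


set_phone("Alice", "555-0100", when=100)
set_phone("Alice", "555-0199", when=200)
print(phone_as_of("Alice", 150), phone_as_of("Alice", 250))
```

Undo then amounts to reading an earlier point in time, and asking what a number was 7 months ago is a single query.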

Principles

I didn't know it at the time, but some principles emerged over the years.

Dependencies

It is easy and often a good idea to use third party packages for functionality you need. PyPI has 600 thousand packages covering almost anything you can think of. When APSW started the ecosystem was a lot less mature, and depending on third party packages was a lot more difficult.

To this day APSW has no runtime third party dependencies, and for compilation and documentation uses the de facto Python standards, described below. The standard library is used.

Overall that was the right decision because it means I am responsible for every line of code, which means I can ensure the appropriate levels of testing, documentation, and interoperability.

Version Churn

SQLite kept updating, and Python kept updating. To make best use of SQLite I decided to keep up with updates, and eventually decided that everything you could do from C you should be able to do from Python. After all SQLite was adding things for a reason. I also decided to have APSW match the corresponding version of SQLite - ie you wouldn't be able to use an old version of SQLite with a new version of APSW. To do so would hugely increase the combinations of testing needed. However you can use a new version of SQLite with an old version of APSW just fine.

For 17 years I added support for each new version of Python, and never dropped any. Python used to have what felt like an arbitrary release schedule, with the 3.8 release in October 2019 finally bringing it on to a predictable annual schedule. It was also increasingly difficult to support the older versions, and unnecessary because modern practice is not to support end of life software.

I did try to keep breaking changes to code using APSW to a minimum. Other than corners of error handling, I believe there has been no change causing user code churn.

Tooling

I have a fair amount of tooling code. This is to reduce manual maintenance such as keeping code and documentation in step, source code checking such as checking that Connections, Cursors, blobs etc are all verified to be open before functions operate on them, getting an API list from the SQLite website to verify all APIs and constants are used, and even spelling checking where mis-spellings are added whenever they are found. All of the tooling is full of assertions and errors on any uncertainty.

This has turned out to be excellent for project maintenance because it avoids even minor oversights.

Source Code Control

I originally hosted everything on my web site, and used CVS for version control. Then things moved to SourceForge with Subversion source control. Then it was Google Code hosting with Mercurial source control. The final move was GitHub and git source control.

Despite all those transitions, full fidelity of the source changes is available back to April 2006. The changelog goes all the way back to the beginning.

Documentation

The documentation was originally hand written HTML. It worked but meant there was no way to programmatically ensure it was complete and up to date. I was an early adopter of Sphinx which has been fantastic.

Behind the scenes I have comments in C source that are extracted to make documentation and help text, tools to check the entire SQLite API is wrapped, tools to help with type annotations in the doc, and several other doc related scripts.

Building

When I started, the Python standard library included distutils which could be used for both compiled code and pure Python packages. The Python Packaging Authority now maintains a descendant for compiled code named setuptools which still works well for the purpose.

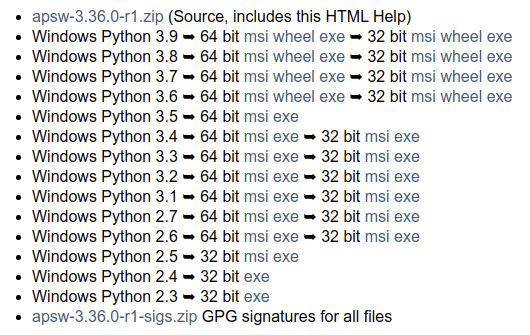

Perhaps the best thing they do is cibuildwheel which is able to compile and test the code under the supported Python versions, operating systems, chip architectures, and ABIs (eg musl vs glibc on Linux). It produces 50 different builds making it trivial for anyone with any of those combinations to install APSW.

Testing

For a few months I had a Python file that ran, exercising each of the APIs. I quickly moved to the standard library unittest and still use that (many third party frameworks have come and gone).

Very little of the testing code is about things that work, as both SQLite and Python fundamentally work and are themselves tested. The vast majority is API boundaries, and errors and exceptions. It gets especially gnarly because multiple exceptions can occur inside the top level call into SQLite (eg VFS error recovery, finalizers, callbacks). For Python 2 support I was stuck with unraisable errors, while Python 3 added chained exceptions.

I used coverage analysis of both Python and C code to make sure all the error/exception conditions were caused. That wasn't sufficient because there are functions such as allocating small amounts of memory, Python objects, or operations like appending to a list that are practically impossible to make fail. I used to have to wrap each location in a macro so that I could cause failure on demand, but that was tedious. It also meant the code didn't look normal, and was obfuscated for editors and analyzers.

Finally statement expressions came to the rescue, where I could macroize calls without having to do something manual in each location. The statement expression would query if that location (file, line, arg list) should fail and how, allowing my harness to then iterate through all failure locations. There are almost two thousand locations. This gets combined with a debug build of Python that does far more assertions and error checking to catch code issues - the most likely being calling into the Python C API while an exception is pending.
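Statement expressions are a C compiler extension, so the real mechanism lives in macros. The harness idea can still be sketched in Python as a rough analogue: every fallible call is routed through a hook that is asked whether this location should fail, and the harness iterates through every location it has seen. `FaultInjector`, `build_row`, and the location strings are all hypothetical.

```python
class FaultInjector:
    """Hypothetical harness: discovers call sites, then fails each in turn."""

    def __init__(self):
        self.seen = set()      # every location that has been reached
        self.fail_at = None    # the one location that should fail now

    def call(self, location, func, *args):
        # Stand-in for the macro: ask whether this location should fail
        self.seen.add(location)
        if location == self.fail_at:
            raise MemoryError(f"injected failure at {location}")
        return func(*args)


faults = FaultInjector()


def build_row(values):
    """Code under test, with each fallible call routed through the harness."""
    row = faults.call("build_row:list", list)
    for value in values:
        faults.call("build_row:append", row.append, value)
    return row


def exercise_all_failures():
    """Run once to discover locations, then once per location made to fail."""
    build_row([1, 2])
    handled = []
    for location in sorted(faults.seen):
        faults.fail_at = location
        try:
            build_row([1, 2])
        except MemoryError:
            handled.append(location)
    faults.fail_at = None
    return handled


print(exercise_all_failures())
```

The difference in C is that the macro wraps the call where it already stands, so the code under test keeps looking like normal code.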

Because C code is involved, that means memory issues. I used valgrind to check everything was good. Its cachegrind mode is used for detailed profiling. Sanitizers significantly improved the testing because they see the C level source code and so catch issues better - valgrind interprets the executable and so has to deduce what is going on.

Overall adding error handling to the code, and the corresponding testing is the vast majority of any code changes - usually three times as much as the actual normal code path. The good news is this gives a lot of assurance errors are handled well, even though most users are unlikely to ever encounter them!

A release test today involves:

- Running my checking tools that look for code issues, missing SQLite APIs etc

- Running coverage to check all lines of C and Python (including the test suite itself) are run by testing

- 32 and 64 bit build and test of all supported Python versions on Windows, because it is most likely to have differences

- Megatest of all these permutations under Linux

  - 32 and 64 bit

  - Every supported Python version

  - All supported SQLite versions

  - Python in regular build, and in debug, assertions, all warnings build

  - APSW in regular and debug build (including SQLite debug/assertions configuration)

  - Default APSW SQLite configuration (everything on), and Debian default SQLite configuration (only some things on)

- The GitHub actions build then also tests the build on each operating system and configuration

It is very rare for the tests to catch any problems, and is delightful when they do.

Python code

For the longest time APSW was almost 100% C code. It was just gluing together the C APIs. Several years ago I realised that users were trying to do higher level things, and it was preferable to go beyond documentation, and also add that as a standard part of APSW.

I'm very pleased that best practices has been popular - almost all queries and code shows it being used.

The ext module is a nice collection of things. My favourite is that three lines of code turn any Python function with positional and keyword arguments into a virtual table.

Size

The video below shows how the source tree has changed over time. It is often the same set of files for each release, updating C code, Python test code, tools, and documentation.

Lines of code is a silly metric, like weighing art, but is correlated with functionality, complexity, effort etc. Here are some comparisons between early 2006 when APSW was functionally complete, and the end of 2024 when it is very complete.

| Kind | 2006 | 2024 |
|---|---|---|
| C source files | 1 | 20 |
| Lines of C source | 2,461 | 15,901 |
| Python files (tools, tests, everything) | 3 | 41 |
| Lines of Python | 764 | 19,486 |
| Lines in main test | 652 | 8,800 |
| All test code | 652 | 11,992 |
| Documentation files | 1 | 27 |
| Lines of documentation | 1,200 | 10,400 |
| Makefile targets | 5 | 46 |

Popularity Contest

So just how popular is APSW?

There are 750 stars on GitHub putting it around position 45 thousand.

APSW is available packaged in most Linux distributions, BSD, and even Termux (Android).

From PyPI there are a million monthly downloads which puts it in the two thousands of all PyPI projects.

Final words

I've seen it said that project maintenance is a lot like gardening. You need to do occasional weeding and pruning, spruce things up, and generally just keep your hand in. I agree.

It has kept me up to date on Python's C API as well as the Python standard library (I'm a fan of dataclasses). SQLite's changes (click the right column) have also been interesting, and I'm glad I did the work to wrap extensions like FTS5.

The long list of differences to the standard module shows the work continues to be worth doing.