APSW is my Python wrapper for SQLite's C interface, gluing it to Python's C interface.

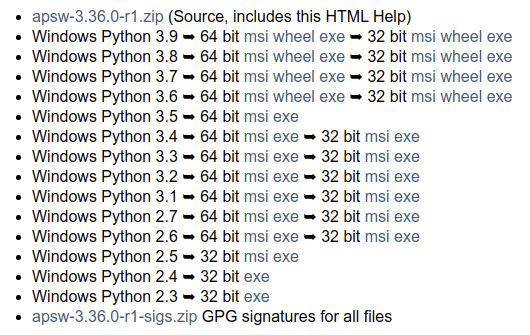

Looking back

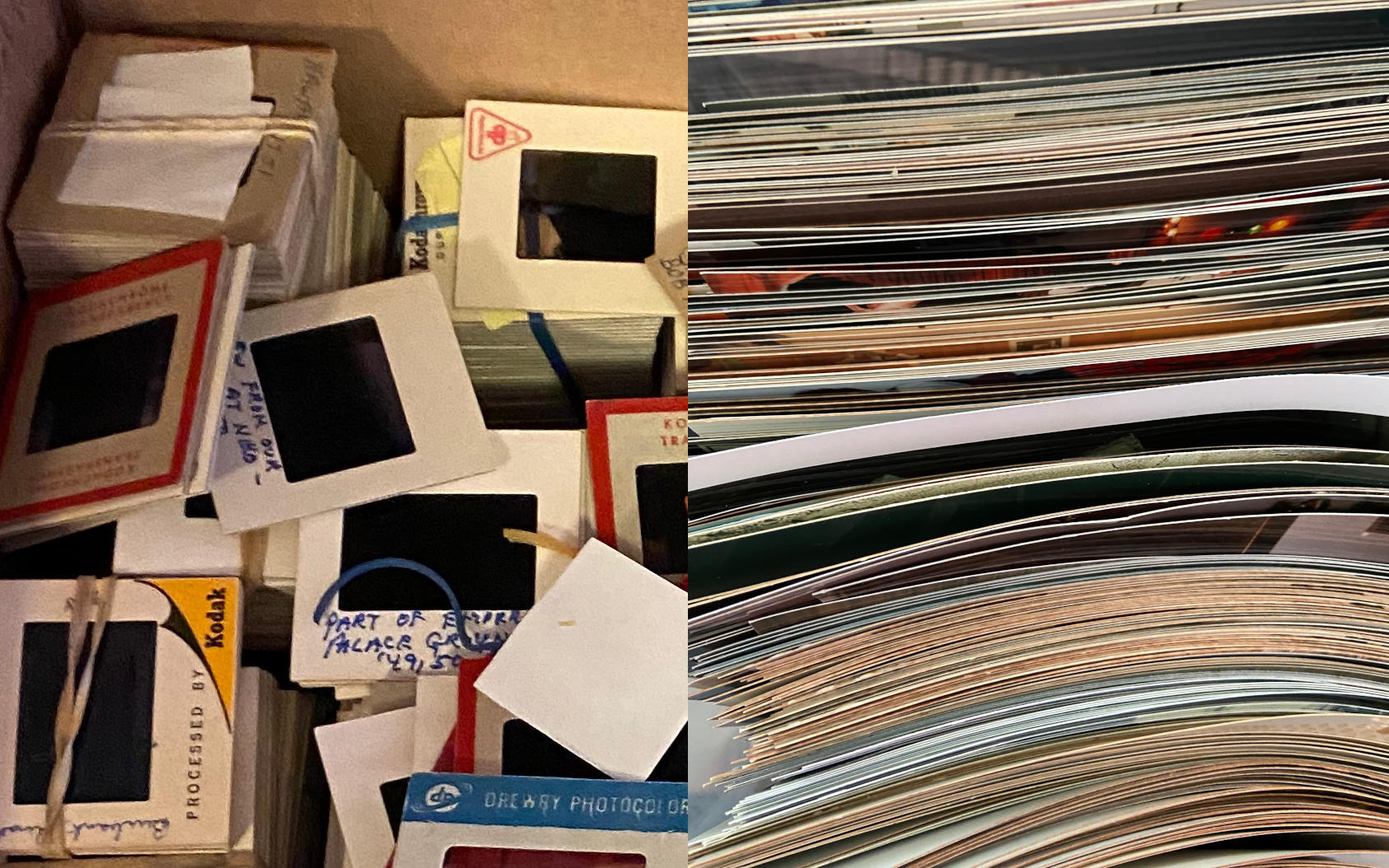

It has been two years since I ended Python 2 and early Python 3 support. While I was proud that you could continue to use any Python version of the previous two decades with corresponding SQLite versions and have your code continue to work without maintenance, it isn't how modern software development works.

Components interact with other components, which in turn do the same. There are many additional tools such as build systems, test systems, documentation generation, and cloud-based, full-scale system integration verification. It is practically impossible to make all versions of all the things work with all the other versions of the other things, even if my little corner did.

I ended up with a simple end of life policy - I do one more release after a particular Python version goes end of life. That means APSW will not be the weak link.

Opportunity

Only supporting modern Python versions let me delete a bunch of C and Python code, and some documentation. Non-developers may not realise it, but one of the great joys of being a developer is when you get to delete stuff. That frees up brain space for better things. The last things removed were some documentation saying that strings are Unicode (a legacy of Python 2), and the u prefix on some strings (like u"hello world"), for the same reason. It felt good.

I could also take advantage of dataclasses in Python code and FASTCALL in C code. This week I greatly appreciated the walrus operator in some new code. I even had problems in some example code that needed Python to consume a noticeable amount of CPU time, but performance enhancements made that more difficult!
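For readers who have not met them, here is a small sketch of the two Python features just mentioned. The `Release` class and `find_releases` function are made up for illustration and are not APSW code.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Release:
    # The dataclass decorator generates __init__, __repr__ and __eq__
    major: int
    minor: int


def find_releases(lines: List[str]) -> List[Release]:
    pattern = re.compile(r"(\d+)\.(\d+)")
    found = []
    for line in lines:
        # Walrus operator: bind the match and test it in one expression
        if (match := pattern.search(line)) is not None:
            found.append(Release(int(match[1]), int(match[2])))
    return found


print(find_releases(["Python 3.8 added :=", "no version here", "SQLite 3.44"]))
```

Without the walrus operator the match would need its own assignment line before the `if`.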

Most important code

If you change code for the better, there is always a probability you will have broken something else without realising it. This is why developers are wary of changing code, instead adding more, or copying and pasting.

That makes the tests the most important code by far. APSW's test code is a similar size to the C code, and tries to exercise all possible routes through the C. In addition to checking everything works, far more effort is expended on doing everything wrong, and ensuring that all combinations of problems that could happen do happen.

That thoroughness of the test code is what made it possible to reduce and improve the code base. It would have been essentially impossible otherwise.

When a new API is added to SQLite, my rough estimates on effort are:

- 5% - Calling the API

- 15% - Adding error handling, especially all the things that could go wrong. More on this below.

- 5% - Updating documentation

- 75% - Updating the test suite, exercising all the paths, running under all the validation tools and analyzers

Most important code, part 2

It may not seem like much, but the start of most C functions looks like this:

    /** .. method:: __init__(filename: str,
                             flags: int = SQLITE_OPEN_READWRITE | SQLITE_OPEN_CREATE,
                             vfs: Optional[str] = None,
                             statementcachesize: int = 100)

        Opens the named database ...

Of course that isn't code - it is a comment before the actual C code. It serves many duties.

- Documentation

  - I use Sphinx for documentation, and that text ends up in the documentation in reStructuredText format.

- Docstring

  - All Python objects have documentation, which is easily done in Python code. For C implemented objects the text has to be available to the C compiler, so the text is extracted into a header file, processed to keep within C syntax.

- Text signature

  - C objects can have a __text_signature__, used by inspect, which is in yet another format with dollar signs and no return types. This StackOverflow answer has some more details.

- Argument parsing

  - The str and int and default values are all relevant when you need C values to call into SQLite (const char *, and int or long long respectively). I used to use PyArg_ParseTupleAndKeywords which takes a format string with many Python extras, and escapes for your own conversions. Because the format string could disagree with the documented string, I have a tool that converts the comment into the correct defaults and formats. When I adopted FASTCALL it was then a simple matter of generating code to do the parsing directly. It also meant I can produce far better, clearer error messages. (There is a Python internal use tool that is similar.)

- Type stubs

  - For Python code implemented in C, you can provide type stubs which are Python syntax for those C implemented items. I discovered that Visual Studio Code would display any docstrings included with the typing information, so the APSW type stubs include that too. When editing Python code, you can't tell that APSW is implemented in C.

That is a lot of heavy lifting for some "code".
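To make the "one comment, many outputs" idea concrete, here is a minimal sketch of how a documented signature could be turned into structured data. This is not APSW's actual tool: `parse_signature` and `DOC_SIGNATURE` are hypothetical names, and the sketch leans on Python's own parser (`ast.unparse` needs Python 3.9 or later). The last two lines show what a text signature looks like on a builtin.

```python
import ast
import inspect

# The signature from the comment above, joined onto one line.
DOC_SIGNATURE = (
    "__init__(filename: str, "
    "flags: int = SQLITE_OPEN_READWRITE | SQLITE_OPEN_CREATE, "
    "vfs: Optional[str] = None, "
    "statementcachesize: int = 100)"
)


def parse_signature(text):
    """Return (name, annotation, default) tuples; default is None when required."""
    # Wrap the text in a dummy function so Python's parser does the work
    func = ast.parse(f"def {text}: pass").body[0]
    params = func.args.args
    defaults = list(func.args.defaults)
    # Defaults belong to the trailing parameters, so pad the front
    padded = [None] * (len(params) - len(defaults)) + defaults
    result = []
    for param, default in zip(params, padded):
        annotation = ast.unparse(param.annotation) if param.annotation is not None else None
        default_text = ast.unparse(default) if default is not None else None
        result.append((param.arg, annotation, default_text))
    return result


for name, annotation, default in parse_signature(DOC_SIGNATURE):
    print(name, annotation, default)

# A C implemented builtin: the raw text signature uses a $ marker,
# which inspect strips when building a Signature object.
print(len.__text_signature__)
print(inspect.signature(len))
```

From tuples like these a generator could emit the docstring header, the type stub, and the C argument parsing code, which is why keeping a single source of truth in the comment pays off.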

MVP tool: cvise

cvise is a tool that takes a C file known to cause a problem, and reduces the size while verifying it still causes the problem.

While reworking the code base, I could easily detect problems. There are many places where C code calls Python which calls C code which calls Python, with the flow going through CPython's internals, APSW's code, and SQLite's code. All 3 projects have copious amounts of assertion checking so it is easy to detect something has happened that shouldn't.

I'd be able to cause problems using my 10,000 line test suite, but to narrow down and understand it you want a lot less, ideally less than 10 lines. Trying to do so manually is tedious and time consuming, and often the actual nature of the problem is different than you think it is.

cvise takes a --not-c flag, so I could feed it my Python test suite, which it would rapidly hack down to just enough to still reproduce the problem. The result was always surprising and delightful.
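cvise decides whether a candidate reduction is worth keeping by running an "interestingness test" that exits with status 0 when the problem still shows up. Below is a minimal sketch of such a check written in Python. The function names, the marker text, and the timeout are assumptions for illustration, not what I actually ran.

```python
import subprocess
import sys


def still_reproduces(test_file: str, marker: str, timeout: float = 60.0) -> bool:
    """True if running test_file fails and its stderr contains marker."""
    try:
        proc = subprocess.run(
            [sys.executable, test_file],
            capture_output=True,
            text=True,
            errors="replace",  # a crash can leave undecodable bytes behind
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        # A hang is a different problem from the one being chased
        return False
    # Require the specific failure, not just any failure, otherwise the
    # reducer happily shrinks the file down to a syntax error
    return proc.returncode != 0 and marker in proc.stderr


def exit_status(test_file: str, marker: str) -> int:
    """Exit status for a wrapper script: 0 means keep this reduction."""
    return 0 if still_reproduces(test_file, marker) else 1
```

A small wrapper script would finish with `sys.exit(exit_status(...))`, passing the reduced file and the text of the assertion being hunted.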

CPython API

There has been a lot of work behind the scenes to clean up the APIs. I ended up with backport code to make newer APIs available on older supported Pythons.

My favourite has been Py_NewRef which let many places go from two lines of code to one, and is also easy to search for.

Fastcall/Vectorcall

This was the most impactful. Vectorcall is the faster way of making calls, and fastcall is the faster way of receiving calls. Traditionally calling from C code required building a tuple for positional arguments and a dictionary for keyword arguments.

    // Old style - convenient but slow making a Python tuple
    PyObject_CallFunction(object, "lssL", updatetype, databasename, tablename, rowid);

    // New style - directly put arguments in a C array
    PyObject *vargs[] = {NULL,
                         PyLong_FromLong(updatetype),
                         PyUnicode_FromString(databasename),
                         PyUnicode_FromString(tablename),
                         PyLong_FromLongLong(rowid)};
    if (vargs[1] && vargs[2] && vargs[3] && vargs[4])
        PyObject_Vectorcall(object, vargs + 1, 4 | PY_VECTORCALL_ARGUMENTS_OFFSET, NULL);
    // (releasing the references in vargs[1] to vargs[4] is not shown)

The PY_VECTORCALL_ARGUMENTS_OFFSET magic allows that first NULL element to be used by Python's internal machinery instead of having to allocate a new array. Python will automatically create a new tuple if the receiving C code does things the old way.

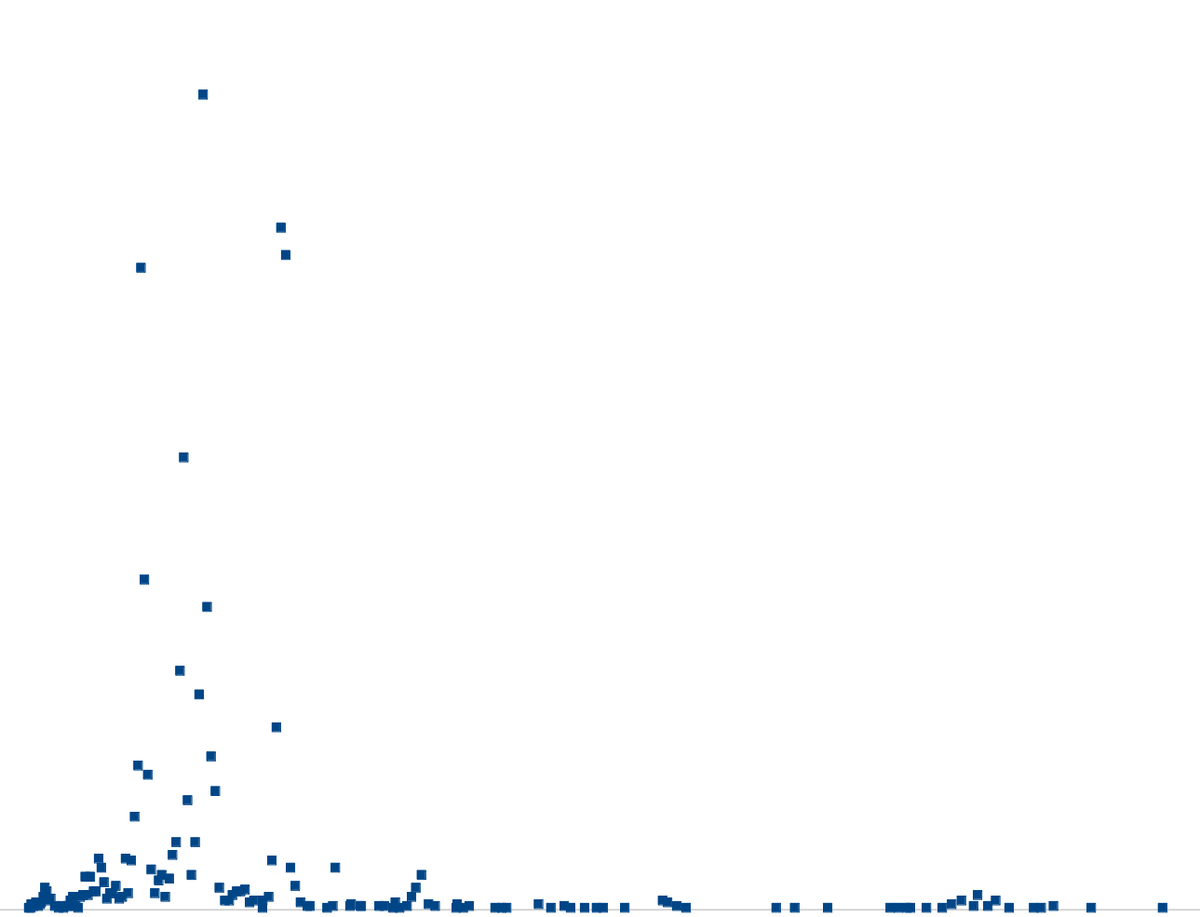

I was curious what the benefits are on the receiving end and made a project that did benchmarking, as well as answering some related questions. It took 22 time units to receive the new style, and 158 to do it the old way - 7 times slower! With each Python release the former has been getting quicker and the latter slower.

Error handling

Writing code in Python is delightful. You don't have to worry about errors, and in the rare circumstances they happen, your program is stopped with an exception. You can add code at whatever level of the call hierarchy is most relevant if you want code to handle future occurrences.
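As a small illustration of that point, with made-up function names: the two lower levels contain no error handling at all, and the one handler sits at the level where a sensible fallback exists.

```python
def parse_port(text: str) -> int:
    # int() raises ValueError on bad input; nothing to check here
    return int(text)


def load_config(settings: dict) -> dict:
    # A missing key raises KeyError; it simply propagates upwards
    return {"port": parse_port(settings["port"])}


def start(settings: dict) -> dict:
    # The only level that knows what a reasonable fallback is
    try:
        return load_config(settings)
    except (KeyError, ValueError) as exc:
        return {"port": 8080, "note": f"using default because of {exc!r}"}


print(start({"port": "80"}))
print(start({"port": "eighty"}))
print(start({}))
```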

In C code it is a totally different matter. Different APIs return error information in different ways. Errors have to be handled immediately. As an example, here is the code to get SQLite's compilation options in a Python list of strings - a total of 4 lines of code.

    PyObject *list = PyList_New(0);
    for(int i=0; sqlite3_compileoption_get(i); i++)
        PyList_Append(list, PyUnicode_FromString(sqlite3_compileoption_get(i)));
    return list;

Now let's add error checking, and it has grown from 4 to 14 lines.

    PyObject *list = PyList_New(0);
    if(!list) // error indicated by NULL return
        return NULL;
    for(int i=0; sqlite3_compileoption_get(i); i++)
    {
        PyObject *option = PyUnicode_FromString(sqlite3_compileoption_get(i));
        if(!option) // error indicated by NULL return
        {
            Py_DECREF(list);
            return NULL;
        }
        int append = PyList_Append(list, option);
        Py_DECREF(option); // list took a reference or failed
        if(append == -1) // error indicated by -1 return, or non-zero?
        {
            Py_DECREF(list);
            return NULL;
        }
    }
    return list;

There are lots of repeated cleanups in the error handling, so we resort to goto. It is the same number of lines of code, but a more useful pattern for more complex code when there are far more items needing cleanup.

    PyObject *option = NULL, *list = PyList_New(0);
    if(!list)
        goto error;
    for(int i=0; sqlite3_compileoption_get(i); i++)
    {
        option = PyUnicode_FromString(sqlite3_compileoption_get(i));
        if(!option)
            goto error;
        int append = PyList_Append(list, option);
        if(append == -1)
            goto error;
        Py_CLEAR(option);
    }
    return list;

    error:
        Py_XDECREF(list);
        Py_XDECREF(option);
        return NULL;

Note how doing error handling in C triples the code size, and this is calling one simple API. I estimate that around 75% of the C code in APSW is error handling. Here are the top 10 goto label names illustrating the point:

- 157 finally

- 75 error

- 57 pyexception

- 39 fail

- 18 param_error

- 11 end

- 8 errorexit

- 5 error_return

- 3 success

- 3 out_of_range

It gets worse

Looking back at the code above, the reason for failures would be running out of memory. Do you know how often those specific lines of code will be the ones that run out of memory?

NEVER

That's right - as a developer I had to write 3 times as much code as necessary, to handle conditions that will never happen in the real world.

The computers running this code also had to do all that extra checking for conditions that never happen. Your computer is doing that for all the software it is running - what a waste.

... and worse

Not only did I write all that extra code, I can't even make it fail, nor could you. A lot of code is shipped without it ever having been run by the developers, nor does it get run in production. If it ever did run there is no certainty it would do the right thing.

And this was a trivial example.

My reaction

There are however adversaries who are very good at making things happen. Virtually every security issue you hear about is because they have figured out the weakest bits of software, and how to tickle things just right so that kind of code does get executed.

The consequences of error handling not being done correctly are one or more of:

- Nothing

- Something that was expected to happen didn't

- Resources are leaked

- Corruption of state

- Memory corruption

- Corruption being exported into storage or the network

- Delays

- Infinite loops

- Invariants no longer holding

- Exposing private information

I don't want my code to be responsible for any of that.

How I test

I originally did it manually. The code looked something like this where FAIL is a macro taking the name of the location, the happy path, and the failure path. Testing would then set each name to fail, and execute the code.

    PyObject *list;
    FAIL("CompileList", list = PyList_New(0), list = PyErr_NoMemory());

That was very tedious and made the code unreadable, and the various editors and other tools couldn't understand it, format it, and so on.

Statement expressions came to the rescue. Given a list of function names, I generate code like this for PyList_New:

     1  #define PyList_New(...) \
     2  ({ \
     3      __auto_type _res_PyList_New = 0 ? PyList_New(__VA_ARGS__) : 0; \
     4      \
     5      _res_PyList_New = (typeof (_res_PyList_New))APSW_FaultInjectControl("PyList_New", __FILE__, __func__, __LINE__, #__VA_ARGS__); \
     6      \
     7      if ((typeof (_res_PyList_New))0x1FACADE == _res_PyList_New) \
     8          _res_PyList_New = PyList_New(__VA_ARGS__); \
     9      else if ((typeof(_res_PyList_New))0x2FACADE == _res_PyList_New) \
    10      { \
    11          PyList_New(__VA_ARGS__); \
    12          _res_PyList_New = (typeof (_res_PyList_New))18; \
    13      } \
    14      _res_PyList_New; \
    15  })

- Line 3 sets up a variable to store the return value without knowing what the return type is

- Line 5 calls APSW_FaultInjectControl giving the function name, filename, calling function name, line number, and stringized arguments. The combination of all those uniquely identifies a location even when there are multiple calls on the same line.

- Line 7 looks for the 0x1FACADE return value to mean go ahead and call the function normally as seen on line 8.

- Line 9 looks for the 0x2FACADE return value to mean go ahead and call the function, but pretend it returned 18. This is necessary for closing functions because I do want them to close. 18 is a valid SQLite error code.

- Line 14 provides the final value, which came from the call on line 5 unless that returned 0x1FACADE or 0x2FACADE.

There is a little more to it, but this lets me cause all the various calls to fail in various ways and have all that error checking code I wrote actually run.
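The same idea can be sketched in Python to show the overall shape of the testing loop. This is only an analogy with invented names (`FaultInjector`, `build_list`, `exercise_all_faults`); the real mechanism is the generated C macro above. A control function is consulted before every wrapped call, and the driver first runs the code normally to discover the call sites, then reruns it once per site with that site forced to fail.

```python
from typing import Any, Callable, List, Optional, Set

PROCEED = object()  # means "call the real function", in the spirit of 0x1FACADE


class FaultInjector:
    def __init__(self) -> None:
        self.seen: Set[str] = set()           # every call site consulted so far
        self.fail_at: Optional[str] = None    # the single site to fail this run

    def control(self, name: str) -> Any:
        self.seen.add(name)
        if name == self.fail_at:
            return MemoryError(f"injected failure at {name}")
        return PROCEED

    def wrap(self, name: str, func: Callable[..., Any]) -> Callable[..., Any]:
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            verdict = self.control(name)
            if verdict is PROCEED:
                return func(*args, **kwargs)
            raise verdict

        return wrapper


def build_list(injector: FaultInjector, items: List[Any]) -> List[str]:
    # Code under test: every call that could fail goes through the injector
    new_list = injector.wrap("build_list:list", list)
    to_text = injector.wrap("build_list:str", str)
    result = new_list()
    for item in items:
        result.append(to_text(item))
    return result


def exercise_all_faults(items: List[Any]) -> List[str]:
    injector = FaultInjector()
    build_list(injector, items)  # discovery run, nothing fails
    failed = []
    for name in sorted(injector.seen):
        injector.fail_at = name
        try:
            build_list(injector, items)
        except MemoryError:
            failed.append(name)  # the error surfaced instead of being hidden
    return failed


print(exercise_all_faults([1, 2, 3]))
```

A site that is consulted but whose failure never surfaces would be missing from the returned list, which is exactly the kind of hidden error this approach is meant to expose.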

Yes, it found bugs that static analysis can't, because of all the calling between CPython, SQLite, and APSW. Python has an error indicator that causes some internal routines to behave differently when it is set. For example they may short circuit and return immediately doing nothing, or they may clear the indicator, hiding that an error happened. The main interpreter loop gets upset when the indicator is set and C code returns values as though there were no problems.

I feel better knowing all my code runs, handles errors correctly, and that the errors never get hidden. (And yes I am proud of my magic hex constants.)

Python type annotations

Python has always let you rapidly develop code without having to be excruciatingly precise in details. Duck typing is wonderful. But there has been an increasing tension because there are more and more components to interact with, and there is greater version churn (see the start of this post!).

In the olden days you referred to a component's documentation and memorized what you used most frequently. That isn't practical any more. The question is "when do you want to know about problems in the code?" The answer is as soon as possible, as it gets more expensive (time, effort, and often money) the later you find out, with the worst case being once customers depend on it. Ideally you want to know as your finger rises from typing something.

Type annotations have let that happen, especially for simpler problems. There are annotations for all of APSW's C and Python code (except the shell, which is on the todo list). I've found it quite difficult to express duck typing, and some concepts are impossible to express, such as the number of arguments a callable takes depending on a parameter passed when some other function was called. But I appreciate the effort made by all the tools, and it does save me effort as I type.
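Here is a minimal sketch of that kind of limitation, using a made-up registry rather than APSW's API. The `SQLiteValue` alias, `register_function`, and `call_function` are names chosen for illustration. The callable has to be annotated as taking any arguments, because the annotation has no way to tie its arity to the `numargs` value, so the mismatch only shows up at run time.

```python
from typing import Callable, Dict, Tuple, Union

SQLiteValue = Union[None, int, float, bytes, str]

_functions: Dict[str, Tuple[int, Callable[..., SQLiteValue]]] = {}


def register_function(name: str, numargs: int, func: Callable[..., SQLiteValue]) -> None:
    # Callable[..., X] means "any arguments": numargs and the arity of func
    # cannot be linked in the annotation
    _functions[name] = (numargs, func)


def call_function(name: str, *args: SQLiteValue) -> SQLiteValue:
    numargs, func = _functions[name]
    if len(args) != numargs:
        raise TypeError(f"{name} expects {numargs} arguments, got {len(args)}")
    return func(*args)


register_function("add", 2, lambda a, b: a + b)
# Declared as taking 2 arguments but the callable takes 1. The annotations
# allow it, and the problem appears only when the function is called.
register_function("broken", 2, lambda a: a)

print(call_function("add", 1, 2))
```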

You do however still get amusing error messages for correct code due to limitations of annotations and the tools. It is reminiscent of C++ template errors. I leave you with one example you should not read, deliberately left as one long line and a scrollbar.

example-code.py:95: error: Argument 1 to "set_exec_trace" of "Cursor" has incompatible type "Callable[[Cursor, str, Union[Sequence[Union[None, int, float, bytes, str]], Dict[str, Union[None, int, float, bytes, str]]]], bool]"; expected "Optional[Callable[[Cursor, str, Union[Dict[str, Union[None, int, float, bytes, str]], Tuple[Union[None, int, float, bytes, str], ...], None]], bool]]"